08/27/2012

Dividing Log File by MAC Addresses

Using regex and array indices to divide appended log entries to separate files

We use a network PXE booting environment to wipe the hard drives of all end of life equipment using a third party disk sanitation utility. The wipe software typically generates a log file for every machine wiped on the network and stores it on a share. The naming convention of the log is based on the MAC address of the on-board ethernet interface appended by -del. An example would be 00:11:22:33:44:55-del.txt. The problem is with certain model laptops the program detects the wireless interface before the ethernet interface but reports the wireless nic's MAC address as "unavailable". Since the logging method uses the first interface mac to name the file the resulting log is created with a generic name and all subsequent logs for the same laptop model are appended to one file called "unavailable-del.txt" instead of being logged individually. With hundreds of appended entries this meant many hours of tedious copy and paste operations to create the separate log files we need for each machine. Unfortunately the wipe software vendor was not able to help in a timely manner. Knowing a few things about powershell I set out to script a solution.

First let's take a look a look at an example of Unavailable-del.txt (Note: The make, model, manufacturer, serial numbers, and mac addresses have been altered in the example log file.) At the top of the log's contents are the headers followed by the appended wipe entries. All fields are tilde delimited. Let's start by setting a few variables and creating our regex to extract the ethernet interface mac addresses as the values to name our new files with.

$logName = "C:\users\name\desktop\test\Unavailable-del.txt" $path = Split-Path $logname -Parent; $path += "\" Set-Location $path [regex]$regex="\w\w:\w\w:\w\w:\w\w:\w\w:\w\w" $log = (gc .\Unavailable-del.txt)

Continue reading >

Copying Log Files to Excel with Powershell

At work we use a third party hard drive sanitation program to wipe hard drives for our internal and external customers. Upon completion of the wipe process, the program generates tilde delimited .txt files and moves them to a shared folder on a log server. Each log contains 88 fields of data and as you can imagine are not very easy to read. I was asked to make the logs more presentable for our customers to read during audits. Being there are hundreds of logs created daily my first thought was to script the solution using Microsoft Powershell's Import-Csv and Export-Csv cmdlets to convert the logs to .csv files so they can be opened in Excel without having to use Excel's import wizard. This can be accomplished from the root of the share containing the logs as follows:

gci *.txt | ForEach-Object { (import-csv $_ -delimiter "~") | Export-Csv $_ -NoTypeInformation }

gci *.txt | rename-item -newname { $_.name -replace ".txt",".csv" }

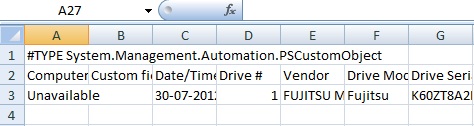

Here we are listing all .txt files in the current working directory and piping the output to the ForEach-Object cmdlet to loop through each file and import to .csv using "~" as the delimiting character to separate each field of information. Notice "Import-Csv" is in parenthesis. This instructs Powershell to import every object in the pipeline to csv first before sending any of the output to the Export-Csv cmdlet. Without the parenthesis Powershell will attempt to output an object in the pipeline at the same time it's trying to input an object to the Export-Csv cmdlet which ends up causing problems. It's worth mentioning in case your not familiar, $_ is Powershell's pipeline variable. It represents every object as it's passed through the pipeline. In this case every .txt file one after another as its passed through the pipeline to Export-Csv. I used the -NoTypeInformation parameter to eliminate the data Type information that ended up being written to the .csv files after conversion. Here is an example of a .csv opened in Excel without using the -NoTypeInformation parameter. The PS Type info is visible in the first row which of course we don't want.

Continue reading >